I've started a new postdoc, working on a collaboration between the O'Leary, Ziv, and Harvey labs, funded by the Human Frontier Science Program grant "Building a theory of shifting representations in the mammalian brain".

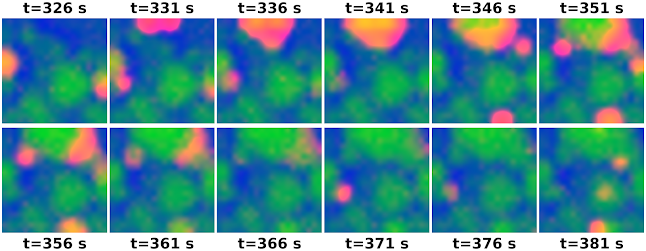

To start, I've been working with Adrianna Loback to try to make sense of a puzzling result from Driscoll et al. (2017): the neural code for sensorimotor variables in parietal cortex is unstable, changing dramatically even for habitual tasks in which no learning takes place.

We think the brain might be using a distributed population code. Because there are so many possible ways to read out a distributed and redundant population code, there could be a stable representation at the population level, despite the apparent instability of single neurons.
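As a toy illustration of this idea (not the analysis on the poster), here is a minimal sketch assuming a purely linear, noise-free population code for a single behavioural variable `x`. All names (`drift_in_null_space`, `tuning_day1`, `readout`, and so on) are hypothetical. The tuning weights drift between "days", but only in directions orthogonal to a fixed linear readout, so individual neurons change while the decoded value does not.

```python
import random

def dot(u, v):
    """Inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))

def drift_in_null_space(tuning, readout, scale, rng):
    """Return drifted tuning weights whose change is orthogonal to `readout`."""
    raw = [rng.gauss(0.0, scale) for _ in tuning]
    # Remove the component of the raw drift that the readout can "see".
    k = dot(raw, readout) / dot(readout, readout)
    delta = [d - k * w for d, w in zip(raw, readout)]
    return [t + d for t, d in zip(tuning, delta)]

rng = random.Random(0)
n_neurons = 50

# Day 1: neuron i responds as tuning_day1[i] * x (assumed linear tuning).
tuning_day1 = [rng.gauss(0.0, 1.0) for _ in range(n_neurons)]

# Fixed linear readout, scaled so that dot(readout, tuning_day1) == 1.
norm = dot(tuning_day1, tuning_day1)
readout = [t / norm for t in tuning_day1]

# Day 2: tunings drift, but only within the null space of the readout.
tuning_day2 = drift_in_null_space(tuning_day1, readout, scale=1.0, rng=rng)

x = 0.7  # the behavioural variable being encoded
decoded_day1 = dot(readout, [t * x for t in tuning_day1])
decoded_day2 = dot(readout, [t * x for t in tuning_day2])

# Count neurons whose tuning weight changed substantially between days.
changed = sum(abs(a - b) > 0.5 for a, b in zip(tuning_day1, tuning_day2))

print(f"decoded on day 1: {decoded_day1:.6f}")
print(f"decoded on day 2: {decoded_day2:.6f}")
print(f"neurons with large tuning change: {changed} of {n_neurons}")
```

In this sketch the readout is deliberately blind to the drift. Any drift component aligned with `readout` would shift the decoded value and would need some form of compensation.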

I'll be presenting our work to date as a poster at the UK Neural Computation conference in Nottingham, July 1st-3rd.

[download poster PDF]

Abstract:

Recent experiments reveal that neural populations underlying behavior reorganize their tunings over days to weeks, even for routine tasks. How can we reconcile stable behavioral performance with ongoing reconfiguration in the underlying neural populations? We examine drift in the population encoding of learned behaviour in posterior parietal cortex of mice navigating a virtual-reality maze environment. Over five to seven days, we find a subspace of population activity that can partially decode behaviour despite shifts in single-neuron tunings. Additionally, directions of trial-to-trial variability on a single day predict the direction of drift observed on the following day. We conclude that day-to-day drift is concentrated in a subspace that could facilitate stable decoding if trial-to-trial variability lies in an encoding-null space. However, a residual component of drift remains aligned with the task-coding subspace, eventually disrupting a fixed decoder on longer timescales. We illustrate that this slower drift could be compensated in a biologically plausible way, with minimal synaptic weight changes and using a weak error signal. We conjecture that behavioral stability is achieved by active processes that constrain plasticity and drift to directions that preserve decoding, as well as adaptation of brain regions to ongoing changes in the neural code.

This poster can be cited as:

Rule, M. E., Loback, A. R., Raman, D. V., Harvey, C. D., O'Leary, T. S. (2019). Constrained plasticity can compensate for ongoing drift in neural populations. [Poster] UK Neural Computation 2019, July 1st, Nottingham, UK.